Latest revision as of 02:16, 19 November 2014 by LornaMorris

Overview of the reBiND workflow

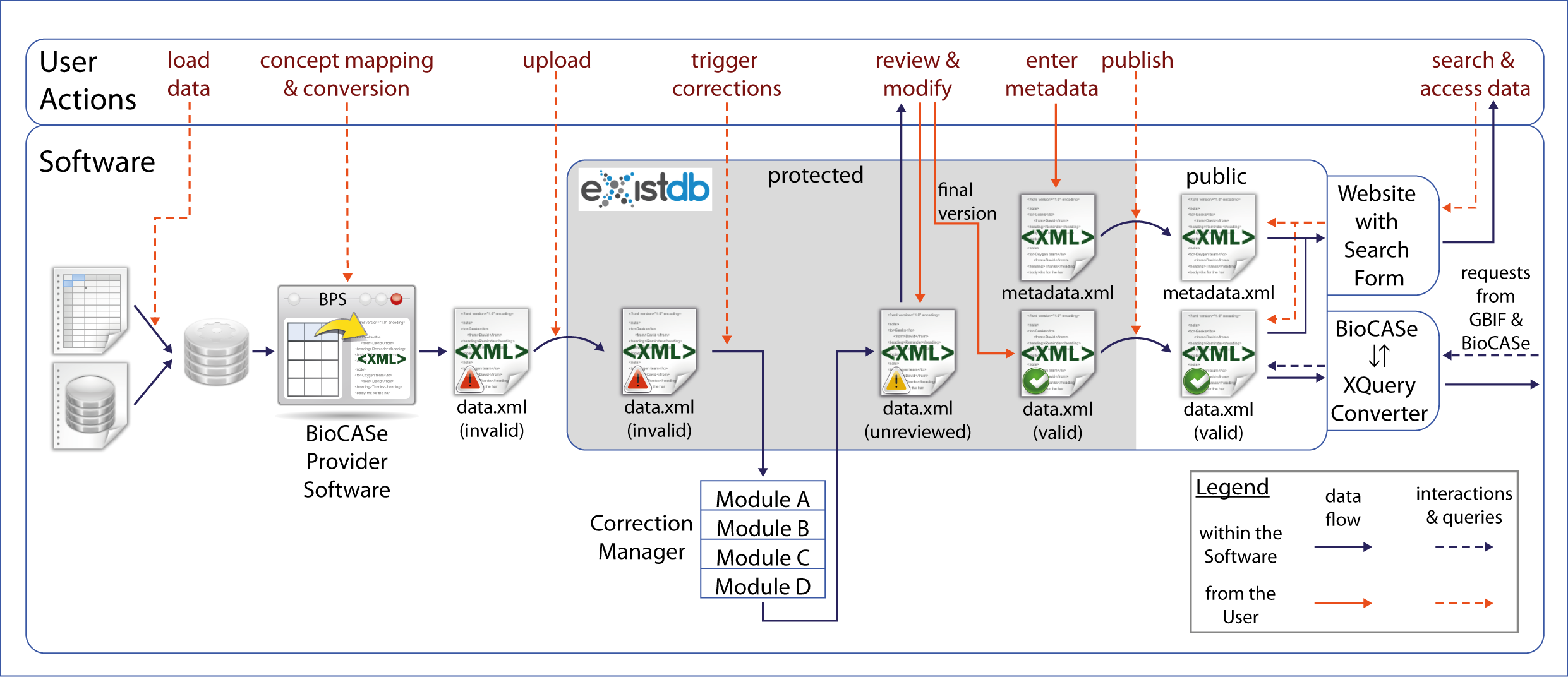

This figure shows the general structure of the reBiND processing architecture: each step of the workflow, from the submission of a dataset, through its preparation and processing, to its final publication.

Before the data can be uploaded into the reBiND data portal, several steps are required to prepare it and map it to an appropriate schema. We used the ABCD (Access to Biological Collections Data) schema (see ABCD in reBiND). ABCD is a common data specification for biological collection units, including living and preserved specimens and field observations.

Most of the data we received from contributing scientists was in spreadsheet format, which can easily be imported into a relational database. Once the data was in a relational database, we used the BioCASe Provider Software (BPS) [1] to map it to ABCD. The BPS supports many different SQL-based databases, and these databases offer import facilities for various file types. To generate the XML files, the columns of the relational database have to be mapped to the corresponding ABCD concepts. At this point the expert knowledge of the contributing scientists is needed. At the end of the mapping process, an ABCD XML document is generated.
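
The idea of mapping database columns to ABCD concepts can be illustrated with a short Python sketch. It is not the BPS and does not reproduce how the BPS works internally. The column names, the `COLUMN_TO_ABCD` mapping and the helper functions are hypothetical, the ABCD 2.06 namespace is assumed, and the output omits mandatory ABCD elements and does not enforce the schema's element order, so it is a skeleton rather than valid ABCD.

```python
import csv
import io
import sqlite3
import xml.etree.ElementTree as ET

ABCD_NS = "http://www.tdwg.org/schemas/abcd/2.06"  # assumes ABCD 2.06

# Hypothetical mapping from spreadsheet columns to ABCD concept paths,
# relative to an ABCD Unit; in practice the contributing scientist decides it.
COLUMN_TO_ABCD = {
    "specimen_no": "UnitID",
    "institution": "SourceInstitutionID",
    "collection": "SourceID",
    "species": "Identifications/Identification/Result/"
               "TaxonIdentified/ScientificName/FullScientificNameString",
}


def load_spreadsheet(conn, csv_text, table="specimens"):
    """Import a CSV export of a spreadsheet into a relational (SQLite) table."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    columns = list(rows[0].keys())
    # Identifiers are interpolated directly: acceptable only for trusted headers.
    conn.execute(f"CREATE TABLE {table} ({', '.join(columns)})")
    placeholders = ", ".join("?" for _ in columns)
    conn.executemany(f"INSERT INTO {table} VALUES ({placeholders})",
                     [tuple(row[c] for c in columns) for row in rows])


def set_path(parent, path, text):
    """Create (or reuse) nested ABCD elements along a slash-separated path; set the leaf text."""
    node = parent
    for tag in path.split("/"):
        qname = f"{{{ABCD_NS}}}{tag}"
        child = node.find(qname)
        node = child if child is not None else ET.SubElement(node, qname)
    node.text = text


def to_abcd(conn, table="specimens"):
    """Map every row to an ABCD Unit; the DataSet skeleton omits mandatory metadata."""
    ET.register_namespace("abcd", ABCD_NS)
    root = ET.Element(f"{{{ABCD_NS}}}DataSets")
    dataset = ET.SubElement(root, f"{{{ABCD_NS}}}DataSet")
    units = ET.SubElement(dataset, f"{{{ABCD_NS}}}Units")
    cursor = conn.execute(f"SELECT * FROM {table}")
    names = [d[0] for d in cursor.description]
    for row in cursor:
        unit = ET.SubElement(units, f"{{{ABCD_NS}}}Unit")
        for name, value in zip(names, row):
            if name in COLUMN_TO_ABCD and value:
                set_path(unit, COLUMN_TO_ABCD[name], value)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_spreadsheet(conn, "specimen_no,institution,collection,species\n"
                           "B-001,BGBM,Herbarium,Abies alba\n")
    print(to_abcd(conn))
```

Keeping the mapping as plain data mirrors the division of labour described above: the code is generic, and the expert knowledge lives entirely in the column-to-concept table.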

Once the data has been converted into ABCD XML, it can be uploaded to the reBiND web portal. After the XML document has been uploaded, the Content Administrator can start the correction process.

The grey box in the figure highlights the steps from the upload of the data into the reBiND portal through validation, correction and review, prior to publication. The Correction Manager runs several correction modules, each with a specific purpose. Whenever a module changes the document or encounters a problem, the issue is recorded in an issue list so that it can be reviewed later. When all modules have finished, the corrected document is loaded back into the reBiND system. At this stage the document should be valid; if the correction modules were unable to fix every problem, the remaining validation errors are marked.
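
The module-and-issue-list pattern described above can be sketched in Python. This is an assumption-laden illustration, not the Correction Manager's actual interface: `run_corrections`, `Issue` and the two example modules are hypothetical, element names are left unqualified for brevity, and the final schema validation step is not shown.

```python
from dataclasses import dataclass
import xml.etree.ElementTree as ET


@dataclass
class Issue:
    module: str
    severity: str  # "information", "warning" or "error"
    message: str


def run_corrections(root, modules):
    """Run each (name, module) pair in order on the parsed document, collecting issues.

    A module is any callable taking (root, report) that edits the tree in place and
    calls report(severity, message) for every change it makes or problem it finds.
    """
    issues = []
    for name, module in modules:
        def report(severity, message, _name=name):
            issues.append(Issue(_name, severity, message))
        module(root, report)
    return issues


def trim_whitespace(root, report):
    """Example module: strip leading/trailing whitespace from non-blank text content."""
    for el in root.iter():
        text = el.text
        if text and text.strip() and text != text.strip():
            el.text = text.strip()
            report("information", f"trimmed whitespace in <{el.tag}>")


def require_unit_id(root, report):
    """Example module: a Unit without a UnitID cannot be repaired automatically."""
    for unit in root.iter("Unit"):
        uid = unit.find("UnitID")
        if uid is None or not (uid.text or "").strip():
            report("error", "Unit without UnitID")


if __name__ == "__main__":
    doc = ET.fromstring("<Units><Unit><UnitID> B-001 </UnitID></Unit><Unit/></Units>")
    for issue in run_corrections(doc, [("trim", trim_whitespace), ("unit-id", require_unit_id)]):
        print(issue)
```

Because each module only receives the document and a reporting callback, new modules can be added for newly discovered technical problems without changing the runner.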

The next step is the review. The issue list produced by the Correction Manager flags issues at three severity levels:

- information (a change was made that is not expected to cause any problems)

- warning (a change has been made, or a content problem has been detected that cannot be fixed automatically but does not affect the validity of the document)

- error (a content problem has been detected that makes the document invalid and cannot be fixed automatically).
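
The three severity levels lend themselves to a simple triage step during review. The following Python sketch is hypothetical (the function names and the `(severity, message)` tuple format are assumptions, not part of reBiND); it counts issues per level and lists the error-level ones, which by the definition above leave the document invalid.

```python
from collections import Counter
from enum import Enum


class Severity(Enum):
    INFO = "information"  # change made, not expected to cause any problem
    WARNING = "warning"   # change made or problem found; validity unaffected
    ERROR = "error"       # problem found that makes the document invalid


def review_summary(issues):
    """Count (severity, message) issues per level, including levels with no issues."""
    counts = Counter(severity for severity, _ in issues)
    return {level.value: counts[level] for level in Severity}


def unresolved_errors(issues):
    """Messages of error-level issues; these leave the document invalid."""
    return [message for severity, message in issues if severity is Severity.ERROR]


if __name__ == "__main__":
    # Illustrative messages only.
    issues = [
        (Severity.INFO, "trimmed whitespace in <UnitID>"),
        (Severity.WARNING, "unusual date format kept as text"),
        (Severity.ERROR, "Unit without UnitID"),
    ]
    print(review_summary(issues))
    print(unresolved_errors(issues))
```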

These issues should then be reviewed. Some problems may have technical causes and can be fixed by specifying new correction modules. Others may be caused by errors in the content, in which case discussion with the contributing scientist may be necessary to fix them.

Independently of the review, a metadata document has to be created. This is done via a dedicated web form in which the user enters information describing the dataset. The data from this form is saved in a separate XML file in EML (Ecological Metadata Language) format.
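
Turning form input into an EML file can be sketched as follows. This is a minimal illustration under stated assumptions: the EML 2.1.1 namespace is assumed, the form field names (`title`, `creator_surname`, `organization`, `contact_email`, `abstract`) and the default `system` value are hypothetical, and the output has not been validated against the EML schema.

```python
import xml.etree.ElementTree as ET

EML_NS = "eml://ecoinformatics.org/eml-2.1.1"  # assumes EML 2.1.1


def form_to_eml(form, package_id, system="reBiND"):
    """Build a minimal EML document from hypothetical web-form fields."""
    ET.register_namespace("eml", EML_NS)
    root = ET.Element(f"{{{EML_NS}}}eml", {"packageId": package_id, "system": system})
    # Only the root is namespace-qualified; EML's child elements are unqualified.
    dataset = ET.SubElement(root, "dataset")
    ET.SubElement(dataset, "title").text = form["title"]
    creator = ET.SubElement(dataset, "creator")
    name = ET.SubElement(creator, "individualName")
    ET.SubElement(name, "surName").text = form["creator_surname"]
    if form.get("abstract"):
        ET.SubElement(ET.SubElement(dataset, "abstract"), "para").text = form["abstract"]
    contact = ET.SubElement(dataset, "contact")
    ET.SubElement(contact, "organizationName").text = form["organization"]
    ET.SubElement(contact, "electronicMailAddress").text = form["contact_email"]
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(form_to_eml({"title": "Example dataset", "creator_surname": "Smith",
                       "organization": "Example Herbarium",
                       "contact_email": "data@example.org"}, "rebind.example.1"))
```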

Once the metadata document has been created and the changes and notes from the automated corrections have been reviewed, the data project can be published. This makes the now valid data document and the metadata document publicly available. The data can then be accessed via a search form on the homepage or via data networks. In the case of the reBiND Service, the ABCD documents can be accessed via biodiversity networks such as GBIF and BioCASe. A specialised module translates queries sent by these two networks in the BioCASe Protocol into XQuery and returns the parts of the documents that are relevant to each query.

Having given an overview of the processing architecture of the reBiND Framework, we now take a closer look at the individual steps.